Trusted Data or Hallucinated Outcomes?

Everyone is racing to show how fast they can produce results.

Speed feels powerful. Popularity fuels confidence. But confidence built on unverified data is a dangerous illusion.

AI models make stuff up. How can hallucinations be controlled?

⚠️ Trusted Data or Hallucinated Outcomes: Real-World AI Mistakes That Shook Industries

Artificial intelligence is transforming how we work, decide, and deliver. Yet, every success story hides a cautionary one — where poor data hygiene and unverified AI outputs led to public embarrassment, financial losses, and legal exposure.

From lawyers citing fabricated cases to airlines misinforming customers, these real incidents reveal a simple truth: when AI systems are trained or deployed without trusted data and continuous data quality management, the results may be fast, but they cannot be relied on.

Each of these cases underscores the urgent need for AI governance, trusted data pipelines, and responsible automation. When AI hallucinates, reputation, regulation, and revenue are all at risk.

🧠 Real World AI Hallucination Impact Examples

| Incident Title | Industry | Impact | What Happened |
|---|---|---|---|
| ChatGPT Generates Fake Case Law, Lawyer Sanctioned | Legal | Submission of fictitious case law in court filings | A lawyer submitted AI-generated legal citations that didn’t exist. The judge sanctioned him and publicly rebuked reliance on unverified AI content. |
| Air Canada Chatbot Hallucinates Refund Policy, Airline Liable | Travel & Customer Service | Misleading refund policy communicated by AI chatbot | An airline chatbot invented a refund rule. A Canadian tribunal held the company liable for misinformation produced by its own AI. |
| AI Chatbots Spread False Medical Advice, Study Warns | Healthcare | Dissemination of false medical information | A major study revealed that healthcare chatbots frequently hallucinate symptoms, treatments, and clinical data, risking patient safety. |
| Google’s Gemini AI Demo Misled Viewers | Technology & Marketing | Misrepresentation of AI capabilities in promotional content | Google admitted its Gemini demo was scripted and edited, highlighting how marketing-driven AI narratives can distort public trust. |
| CNET Publishes Error-Filled AI Financial Articles | Journalism & Media | Publication of erroneous financial advice articles | CNET quietly published dozens of AI-generated financial pieces riddled with factual errors, damaging credibility and reader confidence. |

💡 Why These Incidents Matter

Each headline reinforces one principle: trusted data must come before trusted automation.

AI doesn’t know truth; it predicts patterns. Without strong data validation, continuous quality monitoring, and human-in-the-loop governance, organizations risk turning innovation into liability.

As ServiceNow and other enterprise platforms expand into AI-oriented workspaces, these lessons demand attention. Data integrity isn’t optional — it’s operational.

⚠️ Trusted Data vs. Hallucinated Outcomes: The Hidden Risk Behind AI Speed

The Economist recently explored the tradeoff between reducing hallucinations and limiting model capabilities.

⚡ When Speed Outruns Truth

AI models can now query vast datasets, perform zero-copy analysis, and process terabytes of information in seconds. The potential feels infinite. Yet speed means nothing if the data itself cannot be trusted. Without trusted data at the front end and data quality validation after ingestion, every large language model, no matter how advanced, faces the same predictable risk: AI hallucinations.

Think it can’t happen in your data? Yes, Virginia, even in yours.

🧠 The Science Behind AI Hallucinations and Why Data Quality Matters

Reporting from MIT Technology Review and research from Stanford HAI describe how hallucinations emerge when language models encounter incomplete or inconsistent information. The implications are serious, especially in healthcare, law, and enterprise automation, where one false assumption can ripple into costly or dangerous decisions.

Why does this happen? Because AI doesn’t know truth; it predicts patterns.

When what it learns is outdated, biased, or incomplete, it confidently invents the missing pieces.

The results sound plausible, even authoritative, yet they are fundamentally wrong.

That’s the danger of hallucinated outcomes—systems that sound smart but think wrong.

In today’s AI-driven enterprises, the line between insight and illusion is drawn by trusted data. Trusted data isn’t just accurate; it’s verifiable, contextual, and continuously governed. It powers AI and automation with real intelligence, ensuring every insight, prediction, and decision aligns with reality—not illusion.

🚨 More Data ≠ More Accuracy

Modern systems pride themselves on handling more queries, integrating bigger datasets, and achieving zero-copy efficiency. Ironically, those same strengths can magnify weak data. Every inconsistency or missing value is replicated across multiple layers of automation and analytics. Zero-copy access paired with data quality controls combines that power with risk management.

The illusion of intelligence is subtle but powerful:

- The answers look precise.

- The dashboards appear polished.

- The summaries read convincingly.

- And yet, decisions go off course.

What We Mean by “Trusted Data”

Trusted data is information you can verify, trace, and act upon confidently.

It meets five critical dimensions of quality:

- Completeness – nothing vital missing.

- Accuracy – reflects real-world truth.

- Consistency – harmonized across sources.

- Timeliness – up-to-date and relevant.

- Uniqueness – free of duplicates and conflicts.

When these principles are enforced through governance and AI-driven monitoring, the result is data integrity at scale.
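To make the five dimensions a little more concrete, here is a minimal Python sketch that scores a handful of records against each one. Everything in it is an assumption made for illustration: the field names, the allowed status values, the 90-day freshness window, and the `reference_owners` lookup standing in for a second source. The accuracy score is only a validity check, since real-world accuracy has to be verified against the world, not against a list of allowed values.

```python
from datetime import datetime, timedelta

# Hypothetical schema: field names, allowed values, and the 90-day window are assumptions.
REQUIRED = ("name", "owner", "status")
VALID_STATUS = {"active", "retired"}

def quality_scores(records, reference_owners, now, max_age_days=90):
    """Score each of the five dimensions as a fraction between 0 and 1."""
    n = len(records)
    max_age = timedelta(days=max_age_days)
    return {
        # Completeness: every required field is populated.
        "completeness": sum(all(r.get(f) for f in REQUIRED) for r in records) / n,
        # Accuracy (proxy): the status is one of the allowed values.
        "accuracy": sum(r.get("status") in VALID_STATUS for r in records) / n,
        # Consistency: the owner matches what a second source says.
        "consistency": sum(reference_owners.get(r.get("name")) == r.get("owner") for r in records) / n,
        # Timeliness: the record was updated within the freshness window.
        "timeliness": sum(now - r["updated"] <= max_age for r in records) / n,
        # Uniqueness: distinct names relative to total records.
        "uniqueness": len({r.get("name") for r in records}) / n,
    }

now = datetime(2025, 1, 1)
records = [
    {"name": "web-01", "owner": "ops", "status": "active", "updated": now - timedelta(days=10)},
    {"name": "web-01", "owner": "ops", "status": "active", "updated": now - timedelta(days=10)},   # duplicate
    {"name": "db-01", "owner": "", "status": "unknown", "updated": now - timedelta(days=200)},     # incomplete, invalid, stale
    {"name": "app-01", "owner": "dev", "status": "retired", "updated": now - timedelta(days=120)}, # stale
]
reference_owners = {"web-01": "ops", "db-01": "dba", "app-01": "dev"}
scores = quality_scores(records, reference_owners, now)
```

On this made-up sample, timeliness comes out at 0.5 and the other four dimensions at 0.75, which is the kind of per-dimension signal a governance process could track over time.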

⚠️ When Data Lies: Understanding AI Hallucinations

AI doesn’t invent truth; it predicts patterns. Yet when those patterns come from flawed or ungoverned data, the results quickly drift from reality. What starts as a simple data inconsistency becomes an AI hallucination: a confident, convincing, and completely wrong answer.

These hallucinations don’t just misinform users; they erode trust in automation altogether.

🧠 Why Poor Data Hygiene Becomes a Large Language Model Risk

Poor data hygiene is more than a maintenance issue; it’s a multiplier of misinformation. Every missing field, outdated record, and unchecked inconsistency quietly trains the model to accept bad data as normal. When systems ingest errors, they don’t question them — they replicate them.

Over time, those unchecked flaws evolve into what can only be described as a large language model of lies.

Because AI learns patterns, not principles, it simply reinforces what it sees most often — even when those inputs are wrong. The outcome? An intelligent system that sounds right but decides wrong.

Example: An AI incident bot recommended rebooting a decommissioned server because the CMDB hadn’t been updated in six months. The AI didn’t fail — the data did.
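One way to guard against that failure mode is to check how fresh a record is before any automation acts on it. The sketch below is a hypothetical illustration in Python, not a ServiceNow API: the record shape, the `last_verified` field, and the 30-day threshold are all assumptions.

```python
from datetime import datetime, timedelta

MAX_AGE = timedelta(days=30)  # assumed freshness threshold, tune per environment

def safe_to_act(ci, now):
    """Allow an automated action only if the CI is operational and recently verified."""
    if ci["status"] != "operational":
        return False, "CI is not operational"
    if now - ci["last_verified"] > MAX_AGE:
        return False, "stale record: verify before acting"
    return True, "ok"

now = datetime(2025, 1, 1)
# The server was retired in reality, but the record was never updated,
# so it still claims to be operational. Only its age gives it away.
legacy_server = {
    "name": "srv-legacy-07",  # hypothetical CI
    "status": "operational",
    "last_verified": now - timedelta(days=180),
}
decision = safe_to_act(legacy_server, now)
```

Here `decision` is `(False, "stale record: verify before acting")`. The status field alone would have let the reboot through, which is why the age of the record is treated as a signal in its own right.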

🔍 The Chain Reaction of Weak Data Quality

Bad data compounds faster than good data. Without data quality management, the system doesn’t just store errors — it spreads them across every connected workflow, report, and prediction.

- Missing relationships in a CMDB lead to false impact analysis.

- Inconsistent timestamps distort SLA performance metrics.

- Outdated user data reroutes tasks and alerts to inactive accounts.

Each small inaccuracy becomes an algorithmic assumption, and every assumption becomes an automated decision.
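The timestamp case is easy to reproduce. In this small Python sketch, one system is assumed to record ticket open times in local time (a fixed UTC-5 offset, chosen for illustration) while another records resolution times in UTC, and neither stores a timezone. The ticket values and the 4-hour SLA are made up.

```python
from datetime import datetime, timedelta, timezone

SLA_HOURS = 4  # assumed resolution target

# Both values arrive without timezone info (naive datetimes).
opened_raw = datetime(2025, 1, 15, 9, 0)     # actually local time, UTC-5
resolved_raw = datetime(2025, 1, 15, 15, 0)  # actually UTC

# Naive subtraction silently treats both as the same clock.
naive_hours = (resolved_raw - opened_raw).total_seconds() / 3600

# Attaching the correct offsets recovers the real duration.
local = timezone(timedelta(hours=-5))
opened = opened_raw.replace(tzinfo=local)
resolved = resolved_raw.replace(tzinfo=timezone.utc)
true_hours = (resolved - opened).total_seconds() / 3600

naive_breach = naive_hours > SLA_HOURS  # True: reported as an SLA breach
true_breach = true_hours > SLA_HOURS    # False: resolved in one hour
```

The naive calculation reports six hours and an SLA breach; the real duration is one hour. Any model or dashboard built on the naive number inherits the error.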

⚙️ Trusted Data Turns AI Hallucination into Explainable Intelligence

Trusted data is the antidote to AI hallucinations. It replaces guesswork with governance.

When data is verified, consistent, and current, machine learning operates on evidence, not assumption.

With ongoing data validation and AI governance, organizations can explain why AI made a decision — not just accept what it decided.

Trusted data doesn’t just improve accuracy; it safeguards credibility. It transforms AI from an unpredictable storyteller into a reliable decision partner, capable of supporting automation, analytics, and innovation with confidence.

How Trusted Data Empowers Business Workflows

Every business workflow — from ticket routing to risk prediction — improves when data is trustworthy.

For Service Management:

- Accurate configuration items (CIs) give change risk models correct inputs.

- Incident routing hits the right group the first time.

- SLA metrics reflect reality, not spreadsheet errors.

- AI insights guide analysts to root cause faster.

For Agile Teams:

- Story data and test cases link correctly.

- Metrics show true sprint velocity.

- Predictive analytics identify blockers early.

When teams trust their data, they deliver faster, fail less, and innovate more confidently.

Building Trust at Scale with AI-Driven Data Quality

AI and automation don’t fix bad data — they amplify it.

That’s why modern platforms use AI to continuously validate and improve data before it’s used.

The AI-Driven Data Quality Loop follows four stages:

- Detect anomalies and inconsistencies in real time.

- Predict degradation trends using machine learning.

- Act with automated remediation tasks and governance workflows.

- Learn from every correction to strengthen future accuracy.
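The four stages can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any particular platform implements the loop: a naive linear extrapolation stands in for the machine learning model, the task is a plain dictionary, and the "learn" step is a hypothetical threshold adjustment based on reviewer feedback.

```python
from statistics import mean

def detect(score, threshold):
    """Detect: flag a quality score that falls below the threshold."""
    return score < threshold

def predict_next(history):
    """Predict: extrapolate the average step between observations (ML stand-in)."""
    steps = [b - a for a, b in zip(history, history[1:])]
    return history[-1] + mean(steps)

def act(table, reason):
    """Act: create a remediation task (a plain dict in this sketch)."""
    return {"table": table, "task": "remediate data quality", "reason": reason}

def learn(threshold, false_alarm, step=0.01):
    """Learn: loosen the threshold after a false alarm, tighten it otherwise."""
    return threshold - step if false_alarm else threshold + step

history = [0.98, 0.96, 0.94, 0.92]  # made-up weekly completeness scores
threshold = 0.91

forecast = predict_next(history)
task = None
if detect(forecast, threshold):
    task = act("server_inventory", f"forecast {forecast:.2f} is below {threshold}")
```

Today's score of 0.92 still passes, but the forecast of roughly 0.90 does not, so a task is raised before the threshold is actually crossed. That early warning is the point of the predict stage.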

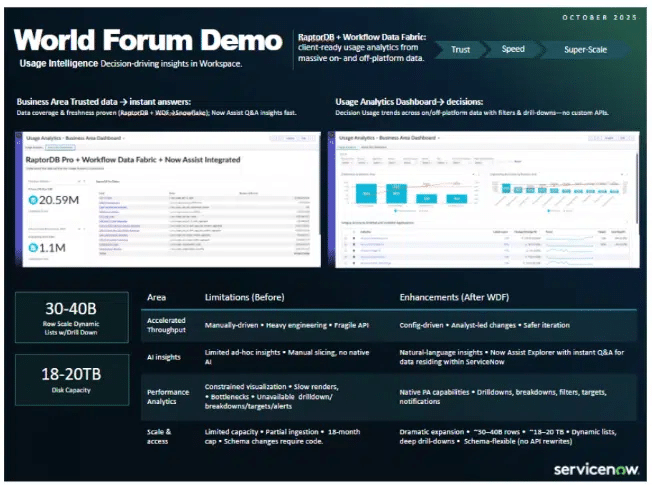

At the ServiceNow World Forum in New York, ServiceNow demonstrated a compelling Now Assist Usage Analytics offering.

Data Quality in Usage Intelligence — Why it Matters

What it shows

- Coverage & freshness: record counts by domain, last-ingest latency, and schema drift flags.

- Completeness: % required fields populated; heatmaps down to table/field.

- Accuracy & validity: outliers, bad formats, failed rules (regex, ranges, referential integrity).

- Consistency & uniqueness: duplicates/conflicts across sources; key collisions.

- Timeliness: event-to-insight SLA, backlog depth, and late-arriving data.
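The three rule types named under accuracy and validity (regex, ranges, referential integrity) are simple to express in code. The Python sketch below is illustrative only; the field names, the deliberately loose email pattern, and the set of known owner IDs are assumptions rather than anything taken from the product.

```python
import re

# Loose format check for illustration; real email validation is more involved.
EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def failed_rules(record, known_owner_ids):
    """Return the names of the rules this record fails."""
    failures = []
    # Regex rule: the value must match an expected format.
    if not EMAIL.match(record.get("email", "")):
        failures.append("format:email")
    # Range rule: the value must fall within plausible bounds.
    if not 0 <= record.get("cpu_percent", -1) <= 100:
        failures.append("range:cpu_percent")
    # Referential integrity: the foreign key must point at an existing row.
    if record.get("owner_id") not in known_owner_ids:
        failures.append("reference:owner_id")
    return failures

owners = {"u1", "u2"}  # hypothetical owner table keys
good = {"email": "ana@example.com", "cpu_percent": 42, "owner_id": "u1"}
bad = {"email": "not-an-email", "cpu_percent": 140, "owner_id": "u9"}

good_failures = failed_rules(good, owners)  # []
bad_failures = failed_rules(bad, owners)    # all three rules fail
```

Storing the failed rule names alongside each record is what makes a later drill-through to "offending records" possible.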

How it works

- Workflow Data Fabric (WDF) and RaptorDB profile data on ingest, store rule results, and surface quality as Performance Analytics (PA) indicators.

- Quality gates in flow-of-work: failed checks auto-create tasks/incidents with owners & severity.

- Now Assist makes it natural-language: “Why is Adoption red for EU?” → root-cause drill + remediation playbook.

- Drill-throughs from KPI → domain → table → field → offending records with audit evidence.
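A quality gate of the kind described above can be pictured as a function that turns failed checks into owned, prioritized work. The Python sketch below is a hypothetical illustration: the check names, owner mapping, default owner, and severity cutoff are assumptions, and the "tasks" are plain dictionaries rather than real platform records.

```python
def quality_gate(check_results, owners, critical_below=0.5):
    """Turn failed checks into task records.

    check_results maps a check name to a (score, threshold) pair.
    """
    tasks = []
    for name, (score, threshold) in check_results.items():
        if score >= threshold:
            continue  # check passed, nothing to do
        tasks.append({
            "check": name,
            # Fall back to a default owner when no one is assigned.
            "owner": owners.get(name, "data-governance"),
            # Very low scores are escalated.
            "severity": "high" if score < critical_below else "medium",
        })
    return tasks

results = {  # made-up scores and thresholds
    "completeness": (0.97, 0.95),
    "freshness": (0.80, 0.90),
    "uniqueness": (0.40, 0.99),
}
owners = {"freshness": "integration-team"}
tasks = quality_gate(results, owners)
```

With these inputs, completeness passes, freshness produces a medium-severity task for the integration team, and uniqueness produces a high-severity task routed to the default owner.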

Decisions it unlocks (exec impact)

- Trustworthy KPIs for adoption, license optimization, incident deflection—no more “is this data right?” debates.

- Faster approvals (CAB, funding) with objective quality signals and evidence.

- Lower risk & rework by catching defects early; fewer rollbacks and false insights at scale (30–40B rows).

Suggested widgets

- DQ Scorecard: Completeness, Accuracy, Consistency, Uniqueness, Timeliness by domain.

- Freshness Tracker: ingest latency by source with SLA bands.

- Duplicate/Conflict Map: top keys with collisions and business impact.

- Quality → Outcome path: correlates DQ scores to adoption, incidents, or ROI.
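As a rough idea of what the last widget could compute, here is a minimal Python sketch of a Pearson correlation between data quality scores and an outcome metric. The numbers are invented purely for illustration, and the function is a plain textbook formula, not the product's method. A strong correlation would be a prompt for investigation, not proof that quality caused the outcome.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up per-domain values, for illustration only.
dq_scores = [0.60, 0.70, 0.80, 0.90]
adoption = [0.30, 0.35, 0.50, 0.55]

r = pearson(dq_scores, adoption)
```

On this sample `r` is close to 1, meaning domains with higher quality scores also show higher adoption. With real data, the same calculation would need far more than four points to say anything useful.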

Tagline: If you can’t trust the data, you can’t trust the dashboard. Usage Intelligence makes quality visible—and actionable.

ServiceNow’s Data Quality capabilities and Now Assist exemplify this model — ensuring that data powering automation, AI Search, and GenAI outcomes remains clean, explainable, and actionable.

Conclusion: Trust Before You Automate

AI without trusted data is like autopilot without navigation — fast, confident, and often wrong.

Before adopting any intelligent automation or GenAI model, organizations must ensure the integrity of the data feeding it.

The choice between trusted data and hallucinated outcomes isn’t philosophical — it’s operational.

And in the age of AI-driven decisions, it defines who leads and who follows.
